Critical Mistakes in Your Robots.txt Will Break Your Rankings and You Won't Even Know It

The use of a robots.txt file has long been debated among webmasters, as it can prove to be a powerful tool when it is well written, or you can shoot yourself in the foot with it. Unlike other SEO concepts that could be considered more abstract and for which we don't have clear guidelines, the robots.txt file is completely documented by Google and other search engines.

You need a robots.txt file only if you have certain portions of your website that you don't want to be indexed and/or you need to block or manage various crawlers.

*Thanks to Richard for the correction to the text above (check the comments for more info).

What's important to understand about the robots file is that it doesn't serve as a law that crawlers must obey; it's more of a signpost with a few indications. Following those guidelines can lead to faster and better indexation by the search engines, while mistakes, such as hiding important content from the crawlers, will eventually lead to a loss of traffic and indexation issues.

Robots.txt History

We're sure most of you are familiar with robots.txt by now, but just in case you heard about it a while ago and have since forgotten about it: the Robots Exclusion Standard, as it's formally known, is the way a website communicates with web crawlers and other web robots. It's basically a text file containing short instructions that direct crawlers to or away from certain parts of the website. Robots are usually programmed to look for this document when they reach a website and to obey its directives. Some robots do not comply with this standard, such as email harvesters, spambots or malware robots that don't have the best intentions when they reach your website.
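To make this concrete, here is a minimal sketch of how a well-behaved crawler might consult the file before fetching a page, using Python's standard-library `urllib.robotparser`. The robots.txt content, the example.com URLs and the "SomeBot" agent name are hypothetical, chosen only for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with one group for Googlebot and a catch-all group.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /search/

User-agent: *
Disallow: /admin/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if a compliant crawler named `user_agent` may fetch `url`."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("Googlebot", "SomeBot"):
        for url in ("https://example.com/admin/", "https://example.com/search/shoes"):
            print(agent, url, is_allowed(ROBOTS_TXT, agent, url))
```

Note that Googlebot is allowed into /admin/ here: a crawler follows the group that names it and ignores the `*` group, so a rule meant for every bot has to be repeated inside each specific group.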

It all started in early 1994, when a badly behaved web crawler caused what amounted to a denial-of-service attack on Martijn Koster's servers. In response, the standard was created to guide web crawlers and keep them away from certain areas. Since then, the robots file has evolved: it now contains additional information and has a few more uses, but we'll get to that later on.

How Important Is Robots.txt for Your Website?

To get a better understanding of it, think of robots.txt as a tour guide for crawlers and bots. It takes the non-human visitors to the areas of the site where the content is and shows them what should and shouldn't be indexed. All of this is done with a few lines in a plain .txt file. Having a well-experienced robot guide can increase the speed at which the website is indexed, cutting the time robots spend going through lines of code to find the content users are looking for in the SERPs.

Over time, more information has been added to the robots file to help webmasters get their websites crawled and indexed faster.

Nowadays most robots.txt files include the sitemap.xml address, which increases the crawl speed of bots. We've found robots files containing job recruitment ads, jokes that hurt people's feelings and even instructions to educate robots for when they become self-aware. Keep in mind that even though the robots file is strictly for robots, it's still publicly available to anyone who appends /robots.txt to your domain. When you try to hide private information from the search engines, you are just showing its URL to anyone who opens the robots file.
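As a rough illustration of how a crawler discovers the sitemap, the sketch below reads the Sitemap line with `RobotFileParser.site_maps()` from Python's standard library (available from Python 3.8). The robots.txt content and the example.com sitemap URL are hypothetical.

```python
from typing import List
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; the Sitemap line is independent of any User-agent group.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/

Sitemap: https://example.com/sitemap.xml
"""


def sitemap_urls(robots_txt: str) -> List[str]:
    """Return the Sitemap URLs declared in a robots.txt file (Python 3.8+)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when the file declares no sitemap.
    return parser.site_maps() or []


if __name__ == "__main__":
    print(sitemap_urls(ROBOTS_TXT))
```

Anyone, not only a crawler, can run the same few lines against your public file, which is exactly why it should not be used to hide anything sensitive.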

How to Validate Your Robots.txt

The first thing to do once you have your robots file is to make sure it is well written and to check it for errors. One mistake here can and will cause you a lot of harm, so after you've completed the robots.txt file, take extra care to check it for mistakes. Most search engines provide their own tools to check robots.txt files and even let you see how the crawlers see your website.

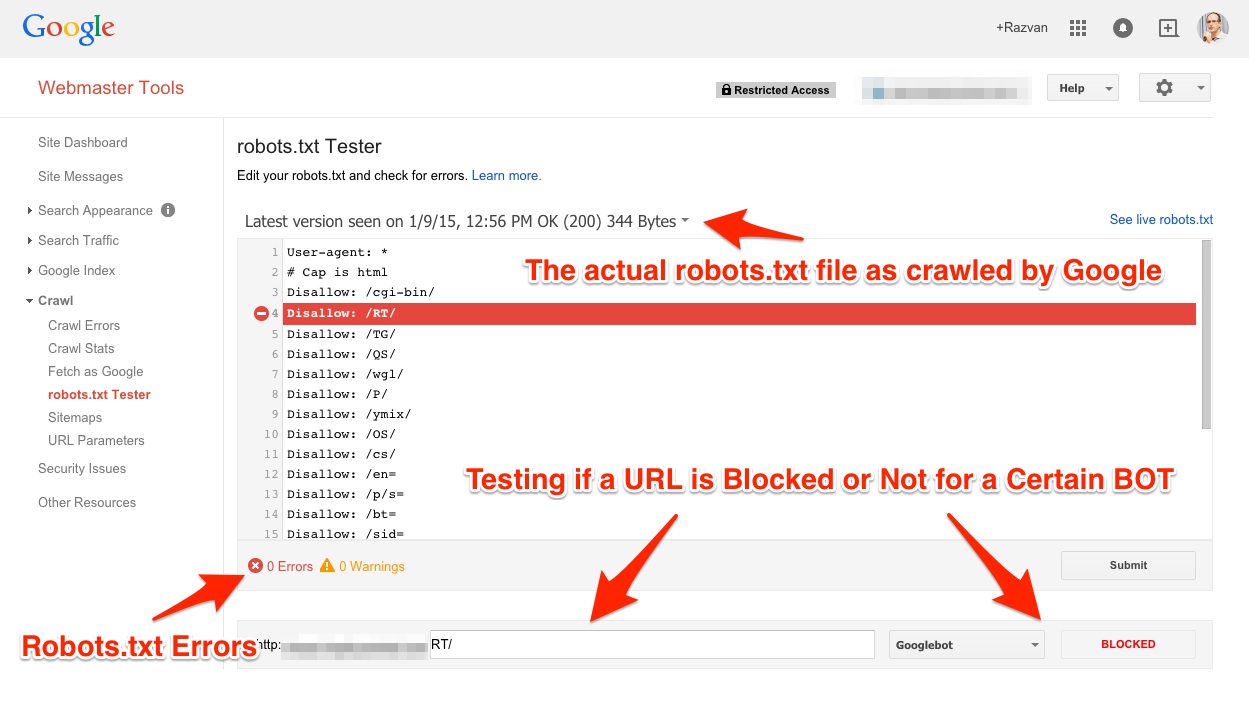

Google's Webmaster Tools offers the robots.txt Tester, a tool that scans and analyzes your file. As you can see in the image below, you can use the GWT robots tester to check each line and see each crawler and what access it has on your website. The tool displays the date and time Googlebot fetched the robots file from your website, the code it encountered, as well as the areas and URLs it didn't have access to. Any errors found by the tester need to be fixed, since they could lead to indexation problems and your site might not appear in the SERPs.

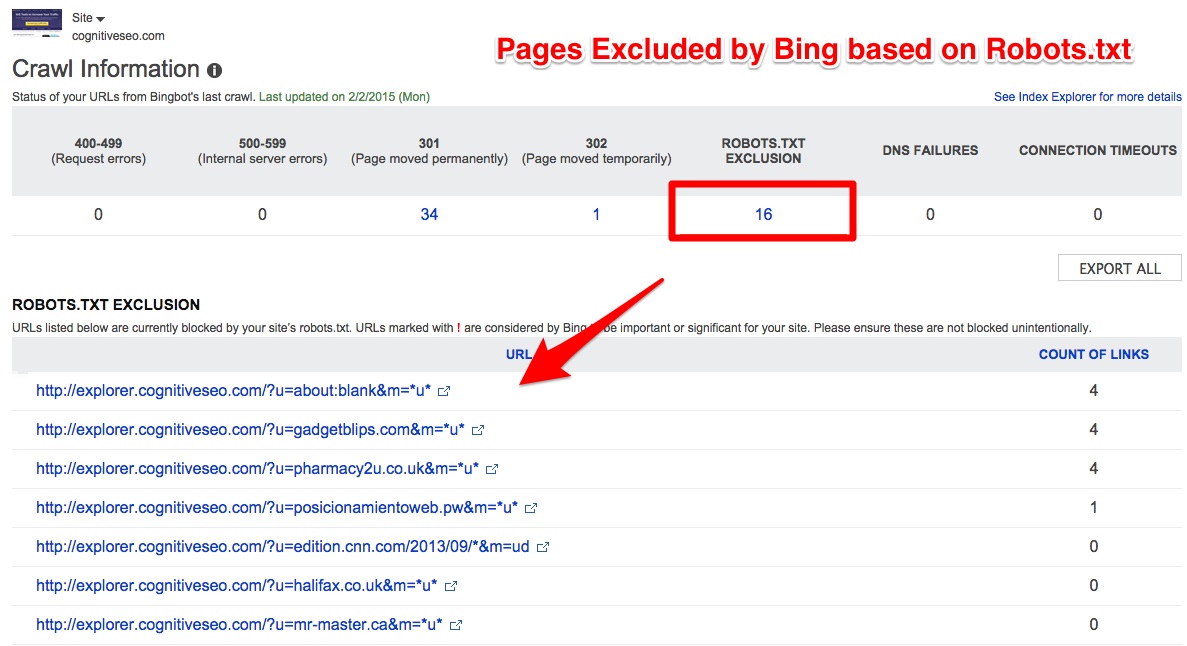

The tool provided by Bing shows you the data as seen by Bingbot. Fetching as Bingbot even shows your HTTP headers and page source as they look to Bingbot. This is a great way to find out whether your content is actually seen by the crawler and not hidden by some error in the robots.txt file. Moreover, you can test each link by adding it manually, and if the tester finds any issues with it, it will display the line in your robots file that blocks it.

Remember to take your time and carefully validate each line of your robots file. This is the first step in creating a well-written robots file, and with the tools at your disposal you really have to try hard to make any mistakes here. Most search engines provide a "fetch as *bot" option, so after you've inspected the robots.txt file yourself, be sure to run it through the automatic testers provided.
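Before handing the file to the official testers, a quick local check can catch obvious slips. The sketch below is a hypothetical linter, not a reimplementation of Google's or Bing's tools: it flags lines without a colon, Allow/Disallow rules that appear before any User-agent line, paths that don't start with "/" or "*", and unknown directives. The set of accepted directives is an assumption; some crawlers support others.

```python
from typing import List

# Directives this sketch accepts; an assumption, since some crawlers support others.
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots_txt(text: str) -> List[str]:
    """Return human-readable warnings for a few common robots.txt mistakes."""
    problems: List[str] = []
    seen_user_agent = False
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append(f"line {lineno}: missing ':' separator")
            continue
        field, value = [part.strip() for part in line.split(":", 1)]
        field = field.lower()  # directive names are matched case-insensitively
        if field == "user-agent":
            seen_user_agent = True
        elif field in ("allow", "disallow"):
            if not seen_user_agent:
                problems.append(f"line {lineno}: {field} appears before any user-agent line")
            elif value and not value.startswith(("/", "*")):
                problems.append(f"line {lineno}: path {value!r} should start with '/' or '*'")
        elif field not in KNOWN_DIRECTIVES:
            problems.append(f"line {lineno}: unknown directive {field!r}")
    return problems


if __name__ == "__main__":
    for warning in lint_robots_txt("User-agent: *\nDisalow: /tmp/\n"):
        print(warning)
```

A typo such as "Disalow" is silently ignored by crawlers, which is exactly the kind of mistake that slips past a visual review.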

Be Sure You Do Not Exclude Important Pages from Google's Index

Having a validated robots.txt file is not enough to ensure that you have a great robots file. We can't stress this enough: a single line in your robots file that blocks an important content section of your site from being crawled can harm you. So, to make sure you do not exclude important pages from Google's index, you can use the same tools you used to validate the robots.txt file.

Fetch the website as the bot and navigate through it to make sure you haven't excluded important content.
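If you keep a list of the pages that must stay crawlable, you can also check them against the file in bulk. The sketch below assumes a hypothetical robots.txt and URL list. Python's `urllib.robotparser` only does plain prefix matching and does not interpret Google's `*` and `$` wildcards, so treat this as a quick first pass before fetching as the bot, not a replacement for it.

```python
from typing import Iterable, List
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: the Googlebot group accidentally blocks the product pages.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /products/

User-agent: *
Disallow: /cart/
"""

# Hypothetical list of pages that must remain crawlable.
IMPORTANT_URLS = [
    "https://example.com/",
    "https://example.com/products/blue-widget",
    "https://example.com/blog/launch",
]


def blocked_urls(robots_txt: str, user_agent: str, urls: Iterable[str]) -> List[str]:
    """Return the URLs from `urls` that `user_agent` is not allowed to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_urls(ROBOTS_TXT, "Googlebot", IMPORTANT_URLS):
        print("BLOCKED for Googlebot:", url)
```

Running the check for each crawler you care about is cheap, and an empty result for the pages that matter is the outcome you want.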

Before listing pages to be hidden from the bots, make sure they belong to one of the following categories, which hold little to no value for search engines:

- Code and script pages

- Private pages

- Temporary pages

- Any page you believe holds no value for the user.

We recommend having a clear plan and vision when creating the website's architecture, so that it's easier to disallow the folders that hold no value for search crawlers.
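When low-value content lives in dedicated folders, the Disallow section can be generated from that plan instead of being written by hand. This is a minimal sketch with a hypothetical `build_robots_txt` helper; the folder names "scripts", "private" and "tmp" simply mirror the categories listed above, and the sitemap URL is a placeholder.

```python
from typing import Iterable, Optional


def build_robots_txt(disallowed_folders: Iterable[str], sitemap_url: Optional[str] = None) -> str:
    """Build a robots.txt that blocks whole folders for every crawler."""
    lines = ["User-agent: *"]
    for folder in disallowed_folders:
        name = folder.strip("/")
        if not name:
            continue  # never emit "Disallow: /", which would block the whole site
        # The trailing slash limits the rule to the folder, so /tmp/ won't match /tmp-notes.html.
        lines.append(f"Disallow: /{name}/")
    if sitemap_url:
        lines += ["", f"Sitemap: {sitemap_url}"]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_robots_txt(["scripts", "private", "tmp"], "https://example.com/sitemap.xml"))
```

Skipping an empty folder name is a deliberate guard: a stray "/" in the plan would otherwise turn into a rule that hides the entire site.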

How to Track Unauthorized Changes in Your Robots.txt

Everything is in place now: the robots.txt file is complete and validated, and you've made sure it has no errors and excludes no important pages from Google's crawling. The next step is to make sure that nobody changes the document without your knowledge. It's not only about changes to the file; you also need to be aware of any errors that appear when crawlers request the robots.txt document.

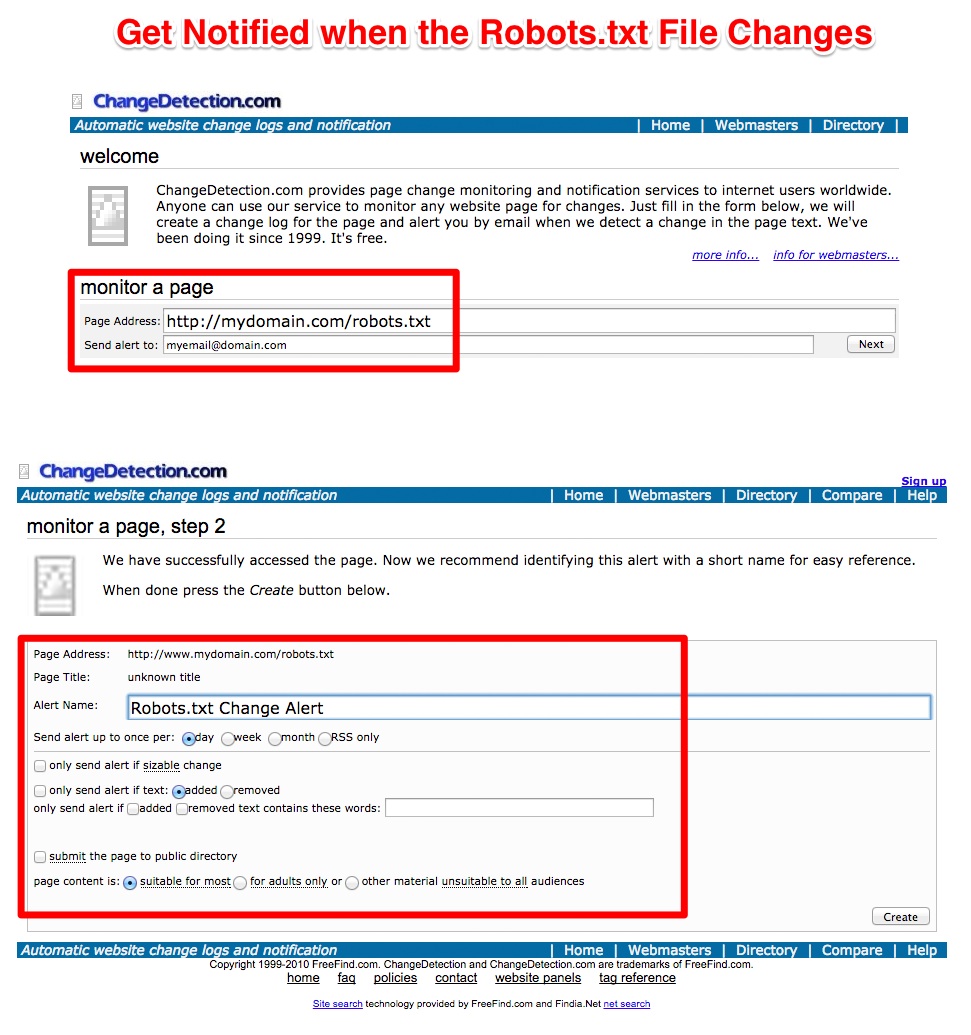

1. Change Detection Notifications – Free Tool

The first tool we want to recommend is changedetection.com. This useful tool tracks any changes made to a page and automatically sends an email when it discovers one. The first thing you have to do is enter the robots.txt address and the email address you want to be notified at. The next step is where you customize your notifications: you can change their frequency and set up alerts only for when certain keywords in the file have changed.
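The core of such a change alert is a comparison between the last copy you saved and the live file. The sketch below covers only that comparison, using `difflib.ndiff` from Python's standard library; fetching the file on a schedule and sending the email are left out, and the `robots_changes` name and the optional keyword filter (mimicking the "only alert on certain keywords" setting) are hypothetical.

```python
import difflib
from typing import Iterable, List, Optional


def robots_changes(previous: str, current: str, keywords: Optional[Iterable[str]] = None) -> List[str]:
    """List lines removed ('- ') or added ('+ ') between two robots.txt versions."""
    changed = [
        line
        for line in difflib.ndiff(previous.splitlines(), current.splitlines())
        if line.startswith(("- ", "+ "))  # skip unchanged ('  ') and hint ('? ') lines
    ]
    if keywords:
        wanted = [k.lower() for k in keywords]
        changed = [line for line in changed if any(k in line.lower() for k in wanted)]
    return changed


if __name__ == "__main__":
    stored = "User-agent: *\nDisallow: /tmp/\n"
    live = "User-agent: *\nDisallow: /\n"  # someone just blocked the entire site
    for line in robots_changes(stored, live, keywords=["disallow"]):
        print(line)
```

An empty list means nothing relevant changed; anything else is worth an email.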

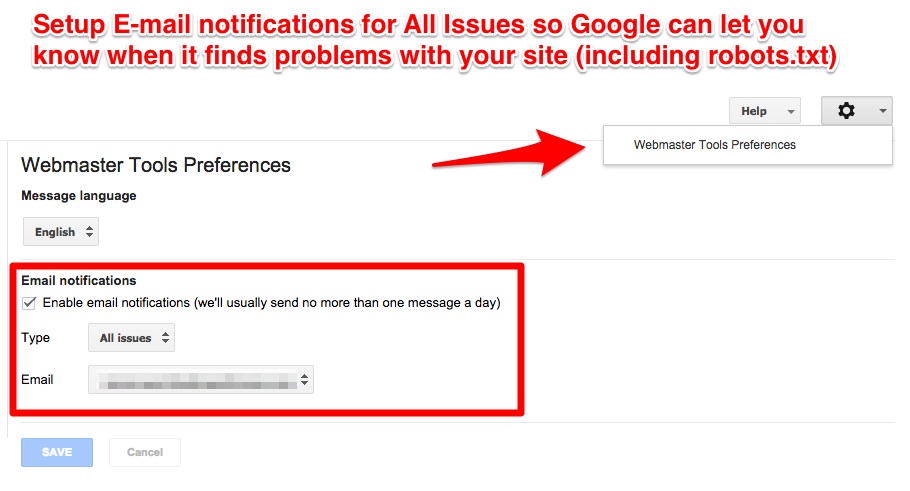

2. Google Webmaster Tools Notifications

Google Webmaster Tools provides an additional alert tool. The difference with this tool is that it notifies you of any error in your code each time a crawler reaches your website. Robots.txt errors are also tracked, and you will receive an email each time an issue appears. Here is an in-depth usage guide for setting up Google Webmaster Tools alerts.

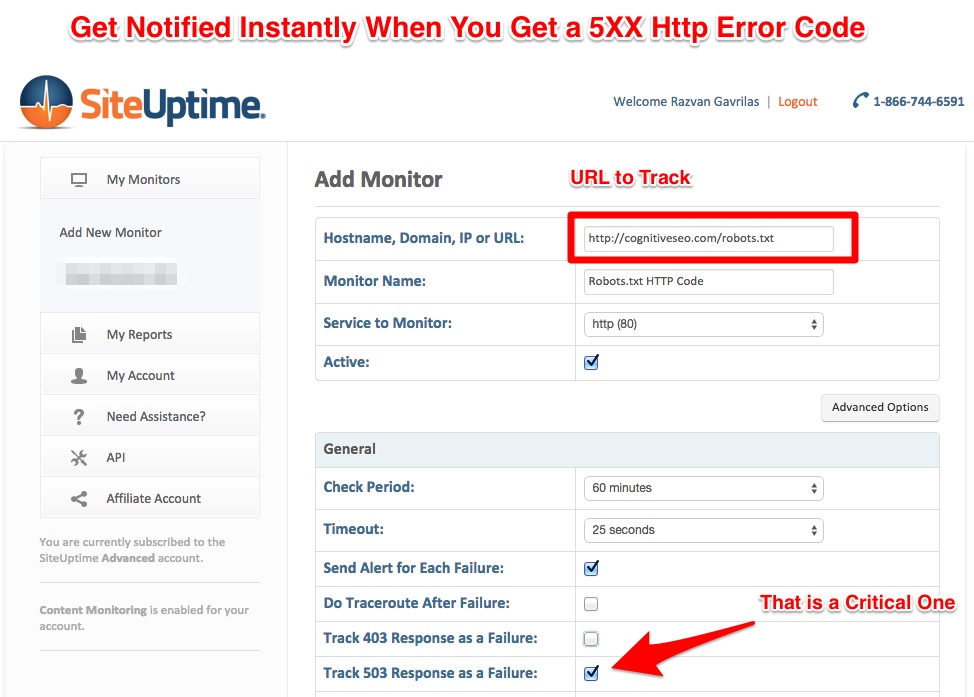

3. HTTP Error Notifications – Free & Paid Tool

To avoid shooting yourself in the foot with your robots.txt file, only these HTTP status codes should be returned when it is requested:

- The 200 code, which basically means that the file was found and read;

- The 403 and 404 codes, which mean that the file could not be accessed or was not found, so the bots will assume you have no robots.txt file. This will cause the bots to crawl your whole website and index it accordingly.

The SiteUptime tool periodically checks your robots.txt URL and can instantly notify you if it encounters unwanted errors. The critical error you want to keep track of is the 503.

A 503 error indicates a problem on the server side, and if a robot encounters it, your website will not be crawled at all.
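The rules above can be summarized as a small lookup that a monitoring script might use. This sketch is modeled on how Google documents its handling of robots.txt fetches (2xx: obey the file; 4xx other than 429: behave as if there were no file; 5xx and 429: treat the site as disallowed for the time being). Other crawlers differ; Python's own `urllib.robotparser`, for example, treats 401 and 403 as "disallow everything". The enum and function names are hypothetical.

```python
from enum import Enum


class RobotsFetchOutcome(Enum):
    """What a crawler does after requesting /robots.txt (modeled on Google's documentation)."""
    OBEY_RULES = "file fetched: parse and obey its rules"
    FOLLOW_REDIRECT = "redirect: follow it to find the file"
    CRAWL_EVERYTHING = "no usable file: crawl without restrictions"
    CRAWL_NOTHING = "server error: treat the whole site as disallowed for now"


def outcome_for_status(status: int) -> RobotsFetchOutcome:
    """Map the HTTP status of a robots.txt request to the crawler's likely reaction."""
    if 200 <= status <= 299:
        return RobotsFetchOutcome.OBEY_RULES
    if 300 <= status <= 399:
        return RobotsFetchOutcome.FOLLOW_REDIRECT
    if status == 429 or 500 <= status <= 599:
        # 429 Too Many Requests is grouped with server errors such as 503.
        return RobotsFetchOutcome.CRAWL_NOTHING
    if 400 <= status <= 499:
        return RobotsFetchOutcome.CRAWL_EVERYTHING
    raise ValueError(f"not an HTTP status code: {status}")


def should_alert(status: int) -> bool:
    """Alert only on the critical case: errors that stop the site from being crawled."""
    return outcome_for_status(status) is RobotsFetchOutcome.CRAWL_NOTHING
```

With this mapping, a 404 is harmless (the site is simply crawled in full), while a 503 is the one worth waking someone up for.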

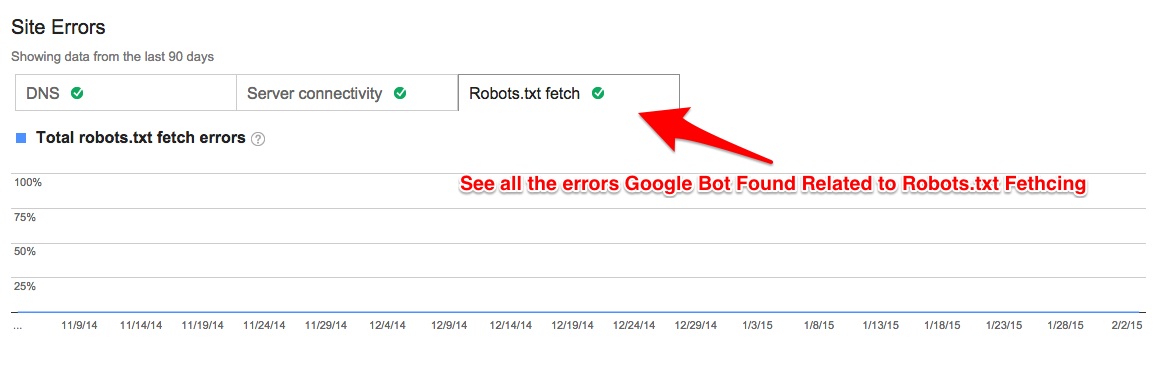

Google Webmaster Tools also provides constant monitoring and shows a timeline of each time the robots file was fetched. In the chart, Google displays the errors it found while reading the file; we recommend you look at it once in a while to check whether it shows any errors other than the ones listed above. As the chart shows, Google Webmaster Tools details how often Googlebot fetched the robots.txt file, as well as any errors it encountered while fetching it.

Critical Yet Common Mistakes

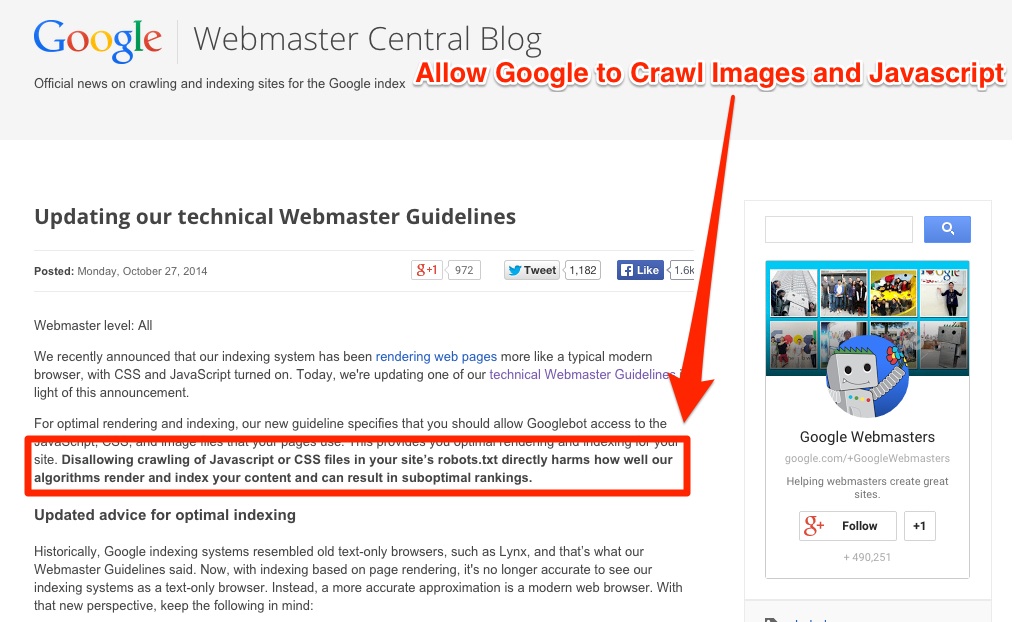

1. Blocking CSS or Image Files from Google Crawling

Last year, in October, Google stated that disallowing CSS, JavaScript and even images (we've written an interesting article about it) can affect your website's overall ranking. Google's algorithm keeps getting better and is now able to read your website's CSS and JS code and draw conclusions about how useful the content is for the user. Blocking these resources in the robots file can harm you and keep you from ranking as high as you probably should.

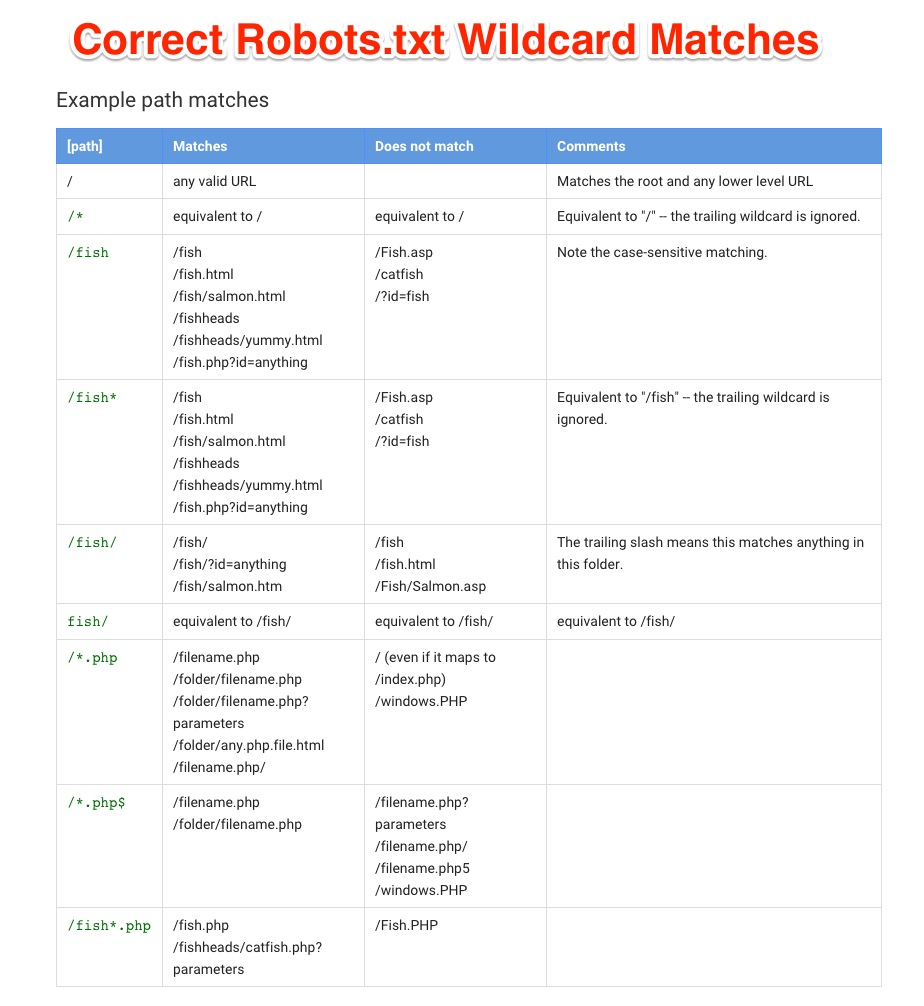

2. Wrong Use of Wildcards May De-Index Your Site

Wildcards, symbols like "*" and "$", are a valid option for blocking batches of URLs that you believe hold no value for the search engines. Most of the big search engine bots recognize and obey them in the robots.txt file. They're also a good way to block access to some deep URLs without having to list them all in the robots file.

So if you wish to block, let's say, URLs with the PDF extension, you could write the following lines in your robots file:

User-agent: Googlebot
Disallow: /*.pdf$

The * wildcard matches any sequence of characters, so the rule covers every URL that ends in .pdf, while the $ anchors the match to the end of the URL. Ending the rule with $ tells the bots that only URLs ending in .pdf shouldn't be crawled, while other URLs containing "pdf" (for example, /pdf.txt) can still be crawled.
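Python's `urllib.robotparser` does plain prefix matching and does not interpret `*` or `$` inside paths, so the sketch below hand-rolls a matcher that follows the semantics just described: `*` matches any run of characters, a trailing `$` anchors the rule to the end of the URL, and every rule is matched from the start of the path (plus query string). The helper names are hypothetical, and this ignores the precedence rules crawlers apply when Allow and Disallow lines conflict.

```python
import re


def rule_to_regex(rule_path: str) -> "re.Pattern[str]":
    """Compile a robots.txt path rule that may contain '*' and a trailing '$'."""
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    # Escape everything literally, then join the pieces around each '*' with '.*'.
    body = ".*".join(re.escape(part) for part in rule_path.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def rule_matches(rule_path: str, url_path: str) -> bool:
    """True if the rule applies to the URL path; re.match anchors at the start."""
    return rule_to_regex(rule_path).match(url_path) is not None


if __name__ == "__main__":
    for path in ("/docs/report.pdf", "/docs/report.pdf.txt", "/pdf.txt", "/docs/REPORT.PDF"):
        print(path, rule_matches("/*.pdf$", path))
```

The last case prints False: matching is case-sensitive, which is exactly what the note below warns about.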

Screenshot taken from developers.google.com

*Note: Like any other URL, the paths in your robots.txt rules are case-sensitive (a rule for .pdf will not block .PDF), so take this into consideration when writing the file.

Other Use Cases for Robots.txt

Since its first appearance, webmasters have found some other interesting uses for the robots.txt file. Let's take a look at other ways someone could take advantage of it.

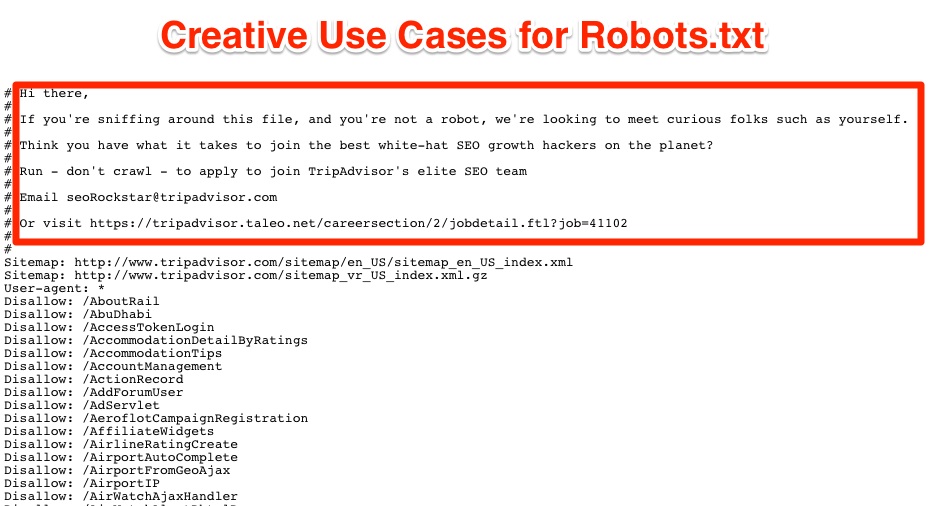

1. Hire Awesome Geeks

Tripadvisor.com's robots.txt file has been turned into a hidden recruitment ad. It's an interesting way to filter out only the "geekiest" of the bunch and find exactly the right people for your company. Let's face it, nowadays people who are interested in your company are expected to take extra time to learn about it, but people who even hunt for hidden messages in your robots.txt file are amazing.

2. Stop the Site from Being Hammered by Crawlers

Another use for the robots file is to stop those pesky crawlers from eating up all your bandwidth. The Crawl-delay directive can be useful if your website has lots of pages. For example, if your website has about a thousand pages, a web crawler can crawl your whole site in several minutes. Adding the line Crawl-delay: 30 tells crawlers to take it a bit easy and wait about 30 seconds between requests, using fewer resources; your website will then be crawled over several hours (roughly eight for a thousand pages) instead of a few minutes.
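The arithmetic behind that estimate is simply pages × delay. The sketch below reads the delay with `RobotFileParser.crawl_delay()` from Python's standard library and multiplies it out, under the simplifying assumption that the crawler fetches exactly one page per delay interval and that fetch time itself is negligible. The robots.txt content and the `estimated_crawl_seconds` helper are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt asking every crawler to pause 30 seconds between requests.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 30
"""


def estimated_crawl_seconds(robots_txt: str, user_agent: str, page_count: int) -> int:
    """Rough crawl time: one page per Crawl-delay interval (0 if no delay is set)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    delay = parser.crawl_delay(user_agent) or 0  # crawl_delay() returns None when unset
    return page_count * delay


if __name__ == "__main__":
    seconds = estimated_crawl_seconds(ROBOTS_TXT, "Bingbot", 1000)
    print(f"{seconds} s, about {seconds / 3600:.1f} hours")
```

For 1,000 pages this gives 30,000 seconds, a little over eight hours; a crawler that ignores the directive is, of course, not slowed down at all.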

We don't really recommend this use, since Google doesn't take the Crawl-delay directive into account; instead, Google Webmaster Tools has a built-in crawl rate setting. The Crawl-delay directive works best for other bots, like Ask, Yandex and Bing.

3. Disallow Confidential Data

Disallowing confidential data is a bit of a double-edged sword. It's great to keep Google from accessing confidential information and displaying it in snippets to people you don't want to see it. But because not all robots obey robots.txt commands, some crawlers can still access it. Similarly, if a person with bad intentions reads your robots.txt file, they will quickly discover the areas of the website that hold precious information. Our advice is to use it wisely, take extra care with the information you place there, and remember that robots are not the only ones with access to the robots.txt file.
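To see how little effort that takes, here is a minimal sketch of what a curious visitor could do with your public file: list every Disallow path in a few lines of Python. The robots.txt content and the `disclosed_paths` helper are hypothetical.

```python
from typing import List


def disclosed_paths(robots_txt: str) -> List[str]:
    """Return every non-empty Disallow path, i.e. what the file tells a human reader."""
    paths: List[str] = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # ignore comments
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths


if __name__ == "__main__":
    sample = "User-agent: *\nDisallow: /internal-reports/\nDisallow: /admin/ # staff only\n"
    print(disclosed_paths(sample))
```

Every path this returns is effectively a signpost pointing at the areas you were trying to keep quiet.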

Conclusion

It's a great case of "with great power comes great responsibility": the ability to guide Googlebot with a well-written robots file is a tempting one. As stated above, the advantages of a well-written robots file are great: better crawl speed, no useless content for crawlers and even job recruitment posts. Just keep in mind that one little mistake can cause you a lot of harm. When writing the robots file, have a clear picture of the path the robots take through your site, disallow them from certain portions of your website and don't make the mistake of blocking important content areas. Also remember that the robots.txt file is not a legal guardian: robots do not have to obey it, and some robots and crawlers don't even bother to look for the file and simply crawl your entire website.

Source: https://cognitiveseo.com/blog/7052/critical-mistakes-in-your-robots-txt-will-break-your-rankings-and-you-wont-even-know-it/